Capturing the human voice in an AI-driven world

Recording and understanding the human voice is the foundation of meaningful social research. Technology should help us hear these voices better, not drown them out.

It wasn’t until the 1960s that recording audio became practical for the average researcher or journalist. Sixty-five years later, in 2025, we’re at another inflection point. AI tools can turn hours of audio into accurate transcripts in minutes, translate those transcripts into dozens of languages, and help us search, code, and analyze the data at scale. The implications for qualitative research are profound.

Why the voice matters

In qualitative research, the human voice provides nuance that numbers can’t capture. Qualitative studies often rely on semi-structured interviews and focus group discussions. Unlike a quantitative survey – where responses are structured and discrete – qualitative interviews capture the richness of conversation: in-depth explanations, tone, pauses, laughter, and local metaphors.

Depending on the participant and context, the same interview guide might yield a lively two-hour discussion in one case, or a brief 30-minute chat in another. This variability is where the richness of qualitative research lies, and good transcription and translation preserve that richness. They capture not just words but tone and emotion, so that insights can be analyzed, cited, and acted upon.

Transcription and translation: past and present

Transcription has come a long way – from painstaking manual typing to AI-driven automation. For decades, transcription involved a human being, fluent in the language being spoken, listening to an audio recording and writing down every word and pause by hand. If the research language differed from the interview language, the transcript was then passed to a translator, another laborious step.

These processes are time-consuming and resource-intensive, making large-scale qualitative research difficult.

Today, AI transcription tools in high-resource languages like English, French, Spanish, or Chinese can generate very accurate transcripts in minutes. Similarly, AI translation models can instantly convert text between these languages with precision. The shift has drastically simplified workflows for researchers in some parts of the world.

Challenges in AI transcription for low-resource languages

For Laterite, and for others working in low-resource languages (e.g., Kinyarwanda, Amharic, Kiswahili, Afaan Oromo), numerous challenges remain. While the quality of AI outputs is improving, it’s not yet at the level qualitative researchers require. Many organizations are addressing these gaps, but as of November 2025, there is still a way to go.

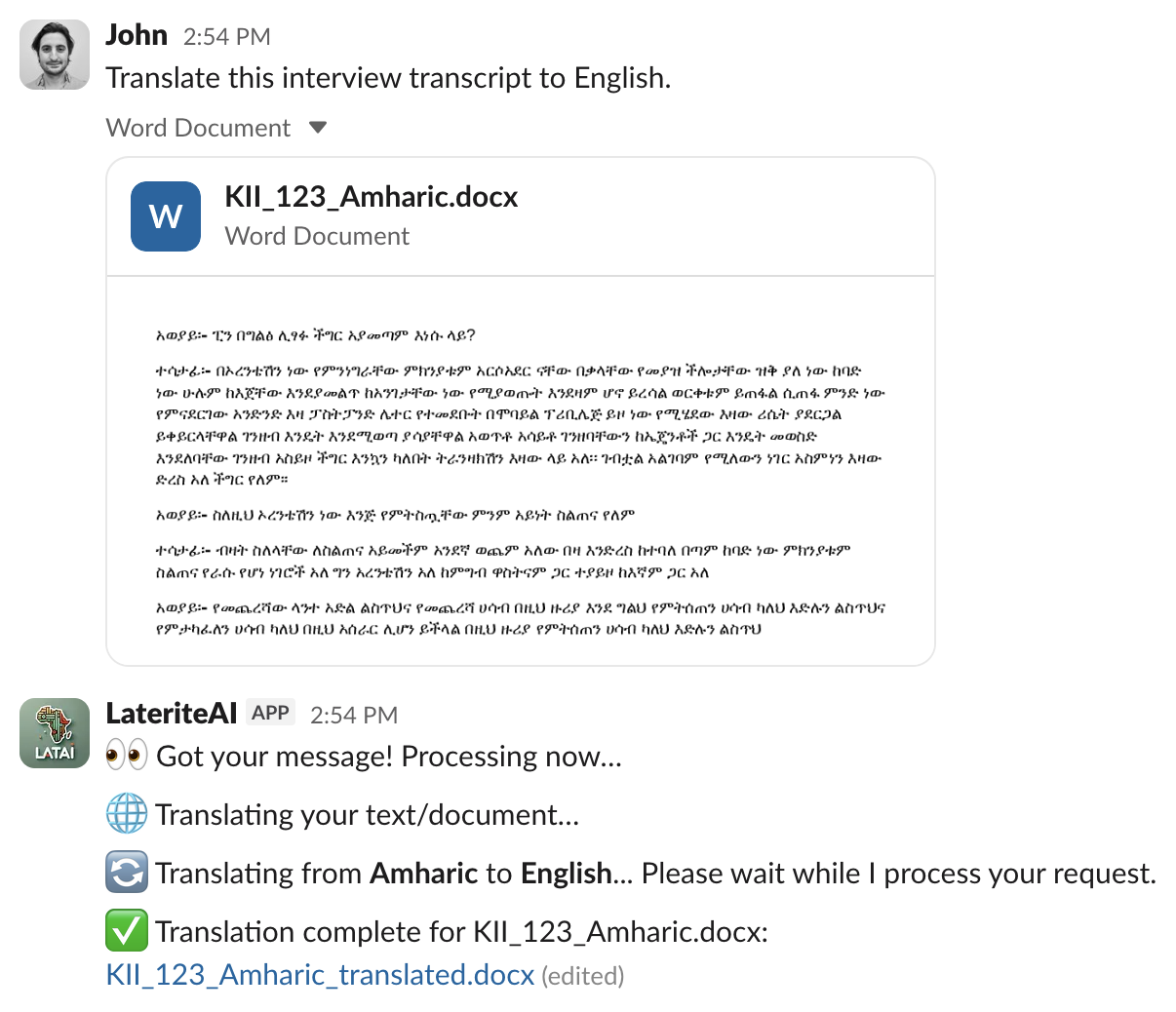

As part of our LateriteAI suite, Laterite has developed in-house tools to automatically transcribe audio and translate text into the languages we commonly work in. Our experience building and testing these tools suggests that publicly available AI models still vary widely in performance.

Several factors contribute to these limitations:

- Poor audio quality: Background noise, or speakers sitting too far from the microphone, reduces accuracy.

- Multiple speakers: Focus group discussions often feature overlapping voices, which AI models struggle to capture.

- Long recordings: Many AI audio models can’t process audio files longer than one hour without trimming silence or chunking the file into manageable sizes, both of which have quality trade-offs.

- Multiple languages (code-switching): In many instances participants naturally switch between multiple languages. In Nairobi, for example, participants speak ‘Sheng’, a mix of Swahili and English. A human can interpret this easily, but it is a challenge for AI models.

- Consent and privacy: You should never share voice recordings with an AI tool without participant consent. If you didn’t get consent, you may be stuck transcribing and translating manually.
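To make the chunking trade-off from the "Long recordings" point concrete, here is a minimal Python sketch that splits a recording into fixed-length chunks with a small overlap, so that a word cut off at one boundary still appears whole in the next chunk. The function name `chunk_bounds`, the 10-minute chunk length, and the 5-second overlap are illustrative assumptions, not settings from LateriteAI or any particular model.

```python
def chunk_bounds(duration_s, chunk_s=600.0, overlap_s=5.0):
    """Return (start, end) times in seconds that cover a recording.

    Consecutive chunks overlap by `overlap_s` seconds. The default values
    are illustrative assumptions, not recommendations for a specific model.
    """
    if chunk_s <= overlap_s:
        raise ValueError("chunk_s must be larger than overlap_s")
    bounds = []
    start = 0.0
    while start < duration_s:
        end = min(start + chunk_s, duration_s)
        bounds.append((start, end))
        if end >= duration_s:
            break  # the last chunk reaches the end of the recording
        start = end - overlap_s  # step back so the next chunk overlaps this one
    return bounds


if __name__ == "__main__":
    # A hypothetical 90-minute focus group discussion.
    for start, end in chunk_bounds(90 * 60):
        print(f"{start:7.1f}s to {end:7.1f}s")
```

The overlap means some words are transcribed twice, so the chunk transcripts need de-duplicating when they are stitched back together. That is one of the quality trade-offs a human reviewer should look out for.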

Laterite’s tips for transcription and translation

Based on our experience developing these tools for LateriteAI, here are some tips for others working in low-resource language contexts.

- Always conduct a human review

Even the best AI models need human oversight. A fluent reviewer should check every transcript to ensure that quality and context are preserved. The time it takes to do a full human review should still be vastly less than the time it would take to manually transcribe and translate.

- Evaluate time savings versus quality

If AI gets you 80% of the way to a usable transcript, then human reviews can close the gap efficiently. If you suspect it will take longer to correct the output than to do it all from scratch, skip the AI entirely.

- Focus on audio quality

Train data collectors on the importance of high audio quality and how to maximize it, including microphone placement and venue selection to avoid background noise. Better inputs lead to more reliable AI outputs.

- Perform quality checks like double or back translations

To verify meaning and accuracy, you could have an AI and a human transcribe or translate the same audio file independently and compare the final products. For translations, another option is to back-translate the translated text into the source language and compare the result to the original.
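One common way to put a number on the comparison between an AI transcript and a human one is word error rate (WER): the number of word insertions, deletions, and substitutions needed to turn one transcript into the other, divided by the length of the reference. The sketch below is a simplified Python version that assumes the human transcript is the reference and splits words on whitespace only. The sample sentences are invented for illustration.

```python
def word_error_rate(reference, hypothesis):
    """Word-level edit distance divided by the number of reference words.

    Simplifying assumptions: lowercase everything, split on whitespace,
    and leave punctuation attached to words.
    """
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript is empty")
    # prev[j] holds the edit distance between the reference words
    # processed so far and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr.append(min(prev[j] + 1,          # word missing from hypothesis
                            curr[j - 1] + 1,      # extra word in hypothesis
                            prev[j - 1] + cost))  # match or substitution
        prev = curr
    return prev[-1] / len(ref)


if __name__ == "__main__":
    human = "the harvest was good this year"  # invented example
    ai = "the harvest is good year"           # invented example
    print(f"WER: {word_error_rate(human, ai):.2f}")
```

For back-translations the wording will legitimately differ from the original even when the meaning is intact, so a score like this is better treated as a flag for which passages a human should read first than as a verdict on quality.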

- Get consent and strip personally identifiable information

Always get participant consent before uploading audio to an AI tool. Remove names, addresses, or identifying details from transcripts, and confirm that AI outputs do not contain any PII. Always have a human being review transcripts to ensure PII is removed. Ensure that whatever AI tool you are using does not save or store your data to train its models.
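A first automated pass can make the human PII review faster, though it cannot replace it. The Python sketch below masks email addresses, phone-like digit sequences, and any names the research team already knows, such as those on a participant roster. The patterns, the `redact` function, and the sample sentence are illustrative assumptions. The phone pattern will also catch some dates and ID numbers, and the name list will miss nicknames and misspellings.

```python
import re

# Deliberately simple patterns: they over-match in some cases and
# under-match in others, so a human review is still required.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE = re.compile(r"\+?\d[\d\s-]{7,}\d")


def redact(text, known_names=()):
    """Mask emails, phone-like numbers, and known participant names."""
    text = EMAIL.sub("[EMAIL]", text)
    text = PHONE.sub("[PHONE]", text)
    # Replace longer names first so a full name is masked before a first name.
    for name in sorted(known_names, key=len, reverse=True):
        if not name.strip():
            continue  # an empty name would match at every word boundary
        pattern = rf"\b{re.escape(name)}\b"
        text = re.sub(pattern, "[NAME]", text, flags=re.IGNORECASE)
    return text


if __name__ == "__main__":
    # Invented sentence and name, for illustration only.
    sample = "Call Aline on +250 788 123 456 or aline@example.org"
    print(redact(sample, known_names=["Aline"]))
```

Running this locally, before any text is sent to an external AI tool, keeps the unredacted transcript on your own machine.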

Opportunities and reflections

Imagine being able to conduct large-scale qualitative studies with a sample of thousands of participants. This was something previously unthinkable. Advancements in technology have the potential to open up entirely new forms of qualitative research.

While we should remain cautious about automating every step of the research process, we should also take advantage of these opportunities to do better and more research. AI-assisted transcription and translation will eventually make it possible for researchers to conduct more focus group discussions and more interviews with the same resources, engaging more participants and generating richer insights.

In an increasingly AI-driven world, let’s remember: there are human beings on the other side of the microphone. Our job as social researchers is to hear them clearly and make sure their perspectives shape what happens next.

This blog post was written by John DiGiacomo, Director of Analytics at Laterite.